Prof. LI Hai’s team from the Hefei Institutes of Physical Science of the Chinese Academy of Sciences has conducted a systematic evaluation of how reliable speech acoustic features are across consumer-grade mobile devices in a healthy population. The team concluded that frequency-related speech features are highly reliable across these devices.

Their findings, published in Behavior Research Methods, provide key supporting data for advancing remote speech assessment technologies.

Remote speech-based cognitive assessment is an emerging technology that uses internet-connected devices to analyze speech as a way of evaluating cognitive function. The method is convenient and simple to administer, making it a promising tool for monitoring cognitive decline in older adults and mental health in adolescents. However, reliable results require that measurements stay consistent across different devices and across repeated sessions, an issue that has received little attention in remote settings.

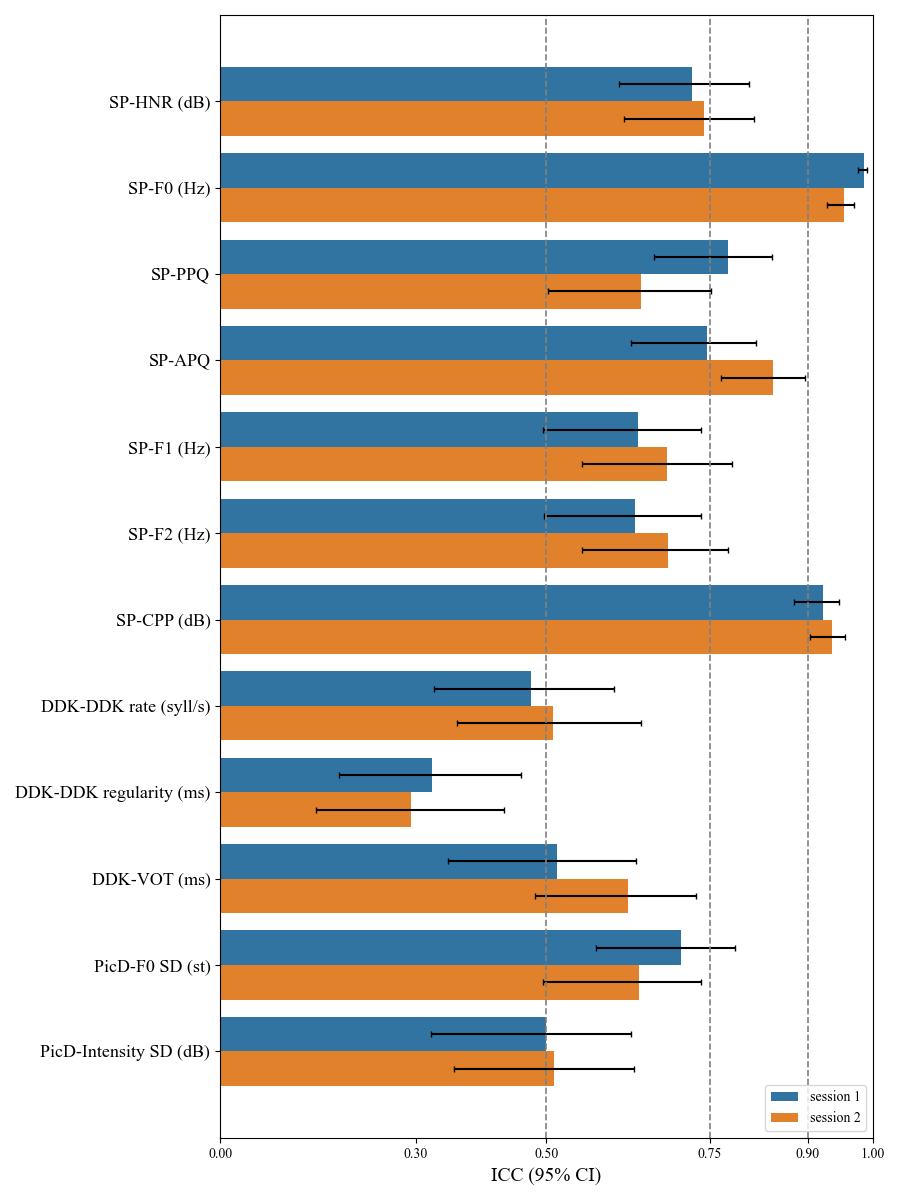

To address this challenge, the team assessed the cross-device and test-retest reliability of speech acoustic features using four common types of consumer-grade device: digital voice recorders, laptops, tablets, and smartphones. They found that frequency-related features, such as fundamental frequency and cepstral peak prominence, were highly reliable across devices and across repeated measurements, making them suitable for remote assessment. In contrast, more complex features, such as syllable rate and regularity, showed lower reliability.
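To give a sense of what a cross-device reliability check can look like, here is a minimal sketch using the intraclass correlation coefficient, ICC(2,1), a statistic commonly used for agreement between measurement instruments. This summary does not say which statistic the team used, so the choice of ICC, the function name `icc2_1`, and the fundamental-frequency values below are illustrative assumptions, not the study's method or data.

```python
import numpy as np


def icc2_1(x):
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    x has one row per speaker and one column per device.
    (Hypothetical helper for illustration; not taken from the study.)
    """
    x = np.asarray(x, dtype=float)
    n, k = x.shape                      # n speakers, k devices
    grand = x.mean()
    row_means = x.mean(axis=1)          # per-speaker mean
    col_means = x.mean(axis=0)          # per-device mean

    # Two-way ANOVA sums of squares
    ss_rows = k * np.sum((row_means - grand) ** 2)
    ss_cols = n * np.sum((col_means - grand) ** 2)
    ss_err = np.sum((x - grand) ** 2) - ss_rows - ss_cols

    msr = ss_rows / (n - 1)             # between-speaker mean square
    msc = ss_cols / (k - 1)             # between-device mean square
    mse = ss_err / ((n - 1) * (k - 1))  # residual mean square

    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# Made-up fundamental frequency values (Hz) for five speakers, recorded on
# four devices: voice recorder, laptop, tablet, smartphone.
f0_hz = np.array([
    [118.2, 118.9, 117.6, 118.4],
    [205.1, 204.3, 206.0, 205.5],
    [152.7, 153.4, 151.9, 152.2],
    [181.0, 180.2, 181.8, 180.7],
    [131.5, 132.1, 130.8, 131.9],
])

print(f"ICC(2,1) for F0 across devices: {icc2_1(f0_hz):.3f}")
```

A value close to 1 means the devices largely agree on each speaker's measurement. The same function could be applied to test-retest data by treating repeated sessions, rather than devices, as the columns.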

The study also emphasizes the importance of standardized protocols for data collection and analysis to improve the reliability of remote speech assessments. Additionally, it suggests that improving algorithms could help consumer-grade devices handle complex acoustic features more accurately in noisy environments.

These insights offer essential guidance for optimizing remote speech-based assessment technologies and promoting their applications in diverse contexts, such as cognitive evaluation in aging populations and mental health monitoring in adolescents.

Cross-device reliability of acoustic features in remote speech assessment. (Image by YANG Lizhuang)